Neuro-Symbolic Concepts

An innovative approach to building AI agents that can learn and reason more like humans.

The pursuit of artificial intelligence that can learn and reason with human-like flexibility remains a central challenge in computer science. A recent preprint, "Neuro-Symbolic Concepts," contributes to this endeavor by proposing a concept-centric paradigm for AI agent development. This post offers a structured academic summary of the paper, examining its core propositions section by section.

Synthesizing Perception, Composition, and Action

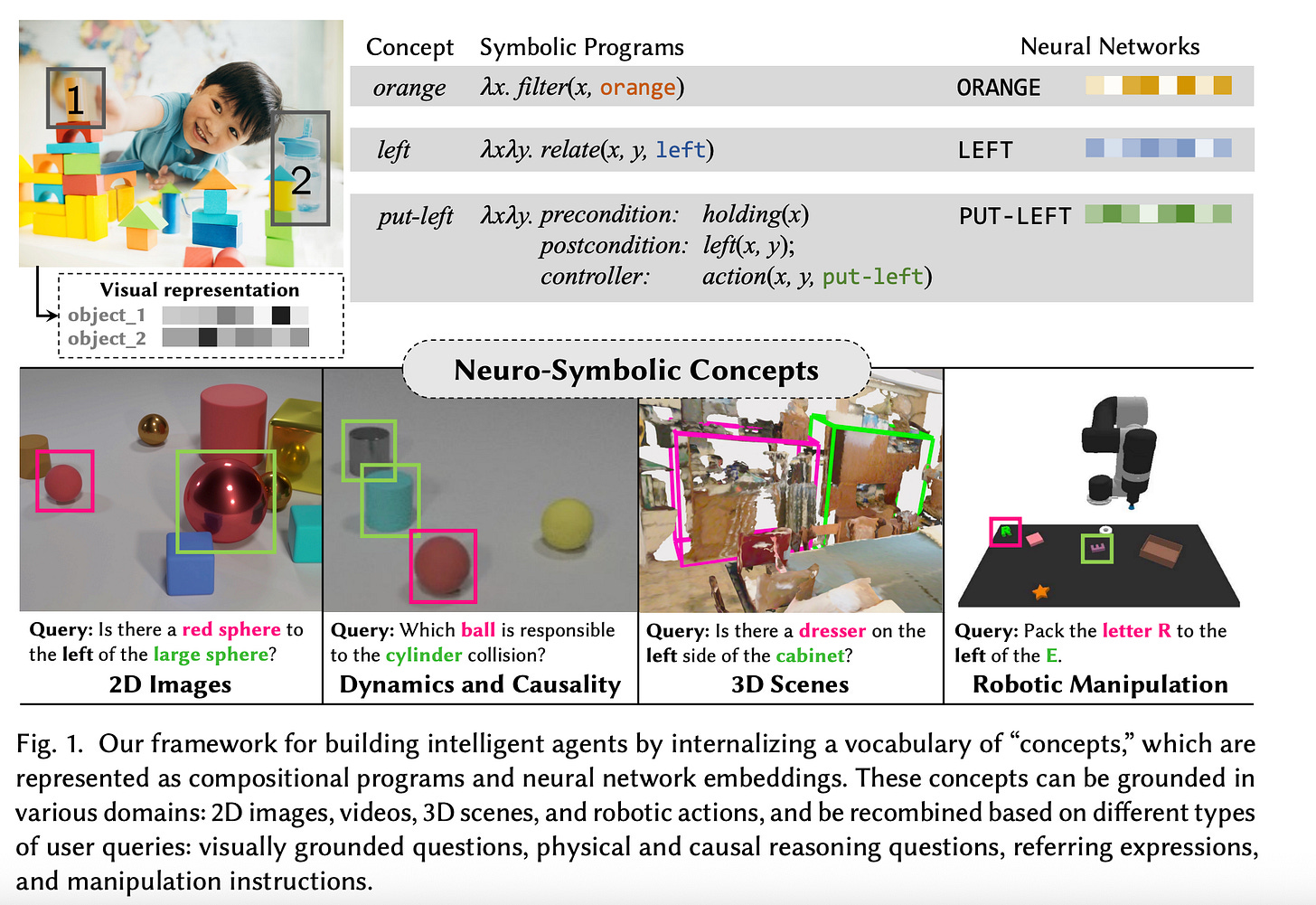

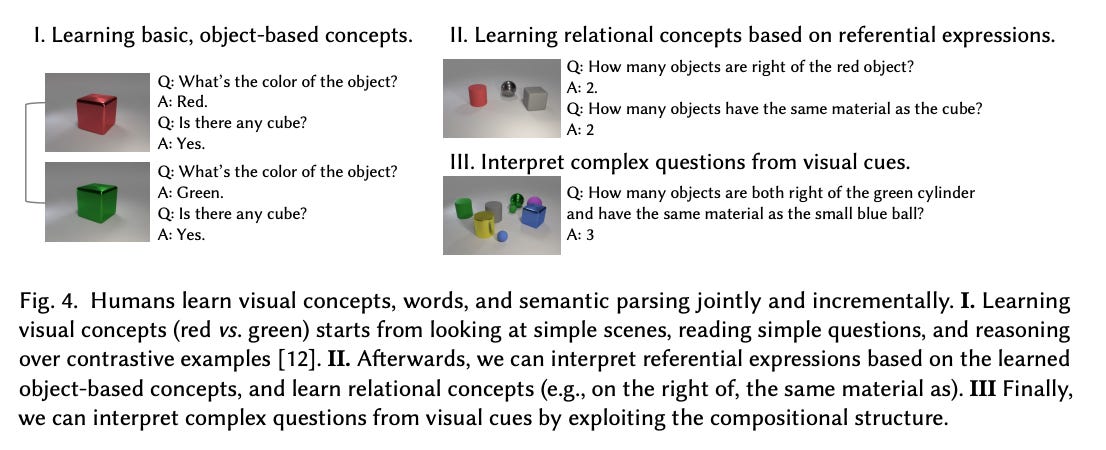

The paper introduces a concept-centric architecture for intelligent agents designed for continual learning and flexible reasoning. Central to this architecture is a vocabulary of "neuro-symbolic concepts": constructs such as objects, relations, and actions that are grounded in sensory inputs and actuation outputs. A key characteristic of these concepts is that they are compositional, so novel conceptual structures can be generated by systematically combining existing ones. The authors explain that the concepts are typed and represented through a hybrid scheme that pairs symbolic programs with neural network representations, supporting both learning and reasoning. The framework is demonstrated across diverse domains, including 2D image and video analysis, 3D scene interpretation, and robotic manipulation, underscoring its potential for efficient learning and recombination of conceptual knowledge.
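To make "typed, compositional concepts" concrete, here is a minimal Python sketch of the symbolic side only: each concept carries a type signature, and a checker accepts or rejects a nested composition before anything is executed. The type names (`Object`, `Bool`, `Action`), the concept names, and the helper `infer_type` are hypothetical illustrations, not the paper's actual type system.

```python
from dataclasses import dataclass
from typing import Dict, Tuple, Union

# Hypothetical type names; the paper's actual type system may differ.
OBJECT, BOOL, ACTION = "Object", "Bool", "Action"


@dataclass(frozen=True)
class Signature:
    """Argument types and return type of a concept (symbolic side only)."""
    arg_types: Tuple[str, ...]
    return_type: str


# A small hypothetical vocabulary covering objects, relations, and actions.
VOCAB: Dict[str, Signature] = {
    "red": Signature((OBJECT,), BOOL),
    "cube": Signature((OBJECT,), BOOL),
    "left_of": Signature((OBJECT, OBJECT), BOOL),
    "and": Signature((BOOL, BOOL), BOOL),
    "pick_up": Signature((OBJECT,), ACTION),
}

# An expression is either a variable name or a tuple (concept, *arguments).
Expr = Union[str, tuple]


def infer_type(expr: Expr, env: Dict[str, str]) -> str:
    """Return the type of a nested expression, or raise TypeError if ill-typed."""
    if isinstance(expr, str):
        return env[expr]  # variables are typed by the environment
    name, *args = expr
    sig = VOCAB[name]
    arg_types = tuple(infer_type(a, env) for a in args)
    if arg_types != sig.arg_types:
        raise TypeError(f"{name} expects {sig.arg_types}, got {arg_types}")
    return sig.return_type
```

For example, `infer_type(("and", ("red", "x"), ("left_of", "x", "y")), {"x": OBJECT, "y": OBJECT})` returns `"Bool"`, a new combination assembled from known pieces, whereas `("pick_up", ("red", "x"))` is rejected because `pick_up` expects an object rather than a Boolean.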

1. Addressing Limitations in Contemporary AI

The initial section sets out the motivation for the proposed neuro-symbolic framework. It critically assesses the limitations of prevailing AI methods, particularly those that rely predominantly on neural networks, in achieving flexible learning and sophisticated reasoning. The authors posit that "concepts," as fundamental units of knowledge, are pivotal for both human cognition and advanced artificial intelligence. This section thereby establishes the intellectual context and argues for paradigms that integrate conceptual understanding directly into AI architectures.

2. Formalisms and Mechanisms

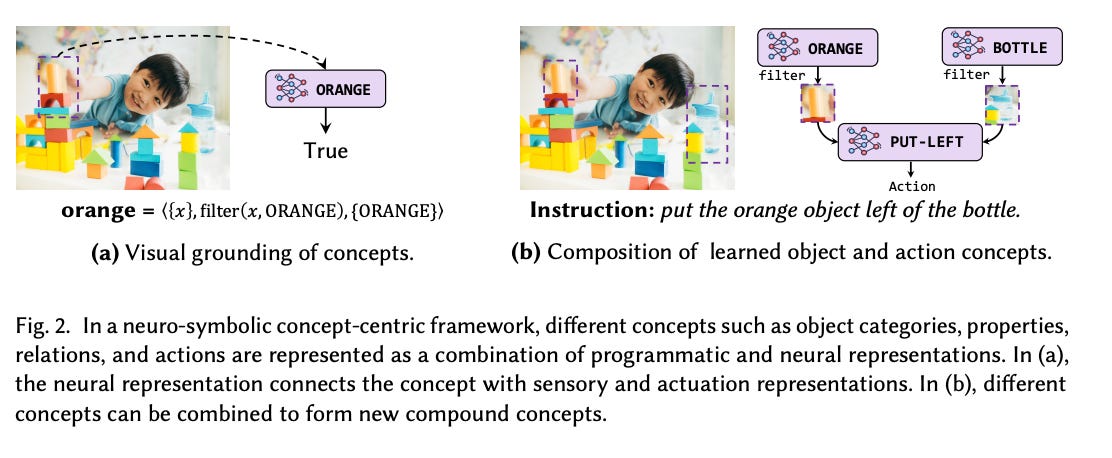

This section gives a formal account of neuro-symbolic concepts, which are defined as tuples that pair neural network representations, used to ground concepts in perceptual data, with symbolic representations that govern their structure and combinatorial properties. The authors detail the representational schema for these concepts and the mechanisms by which they are composed into higher-order conceptual structures. This foundational exposition clarifies how the framework bridges subsymbolic pattern recognition and symbolic abstraction.
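A minimal sketch of the tuple idea, under loudly stated assumptions: here a concept is a hypothetical `(name, arg_types, grounding)` record, the "neural" grounding is a single sigmoid unit standing in for a trained network, and a higher-order concept is formed with a product-based soft conjunction. The paper's actual tuple fields and composition operators may differ, and the embeddings and weights are made up for illustration.

```python
import math
from dataclasses import dataclass
from typing import Callable, Sequence, Tuple

Vector = Sequence[float]


@dataclass(frozen=True)
class Concept:
    """Hypothetical neuro-symbolic concept: symbolic name and type plus a neural grounding."""
    name: str
    arg_types: Tuple[str, ...]
    grounding: Callable[[Vector], float]  # maps an object embedding to a score in (0, 1)


def sigmoid_unit(weights: Vector, bias: float) -> Callable[[Vector], float]:
    """Stand-in for a trained classifier: sigmoid(w . x + b)."""
    def score(x: Vector) -> float:
        z = sum(w * xi for w, xi in zip(weights, x)) + bias
        return 1.0 / (1.0 + math.exp(-z))
    return score


def conjoin(name: str, a: Concept, b: Concept) -> Concept:
    """Compose two concepts of the same type into a new one via soft AND (product)."""
    if a.arg_types != b.arg_types:
        raise TypeError("can only conjoin concepts with matching argument types")
    return Concept(name, a.arg_types, lambda x: a.grounding(x) * b.grounding(x))


# Made-up 2-D object embeddings: dimension 0 loosely tracks color, dimension 1 shape.
red = Concept("red", ("Object",), sigmoid_unit([4.0, 0.0], -2.0))
cube = Concept("cube", ("Object",), sigmoid_unit([0.0, 4.0], -2.0))
red_cube = conjoin("red_cube", red, cube)  # a new concept built without new training
```

With these weights, `red_cube.grounding([1.0, 1.0])` is about 0.78 (each part scores about 0.88), while `red_cube.grounding([1.0, 0.0])` drops to about 0.10 because the cube score is low. The product is only one of several possible soft-logic choices.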

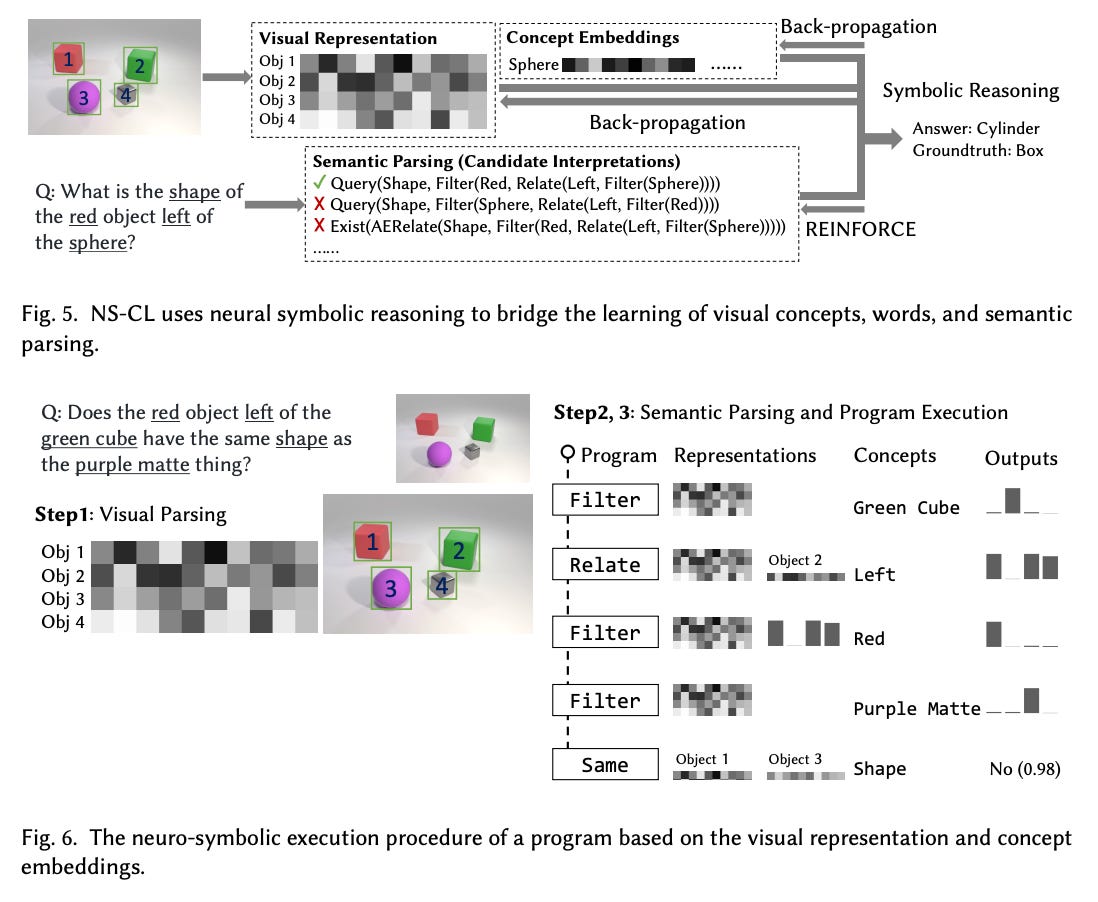

3. A Framework for Neuro-Symbolic Concept Learning in Visual Scene Understanding

Section 3, reportedly untitled in the source, moves from theoretical definition to practical application, detailing a specific framework for neuro-symbolic concept learning with a particular focus on visual scene understanding. The discussion emphasizes the framework's capacity to support critical desiderata for generalist AI agents:

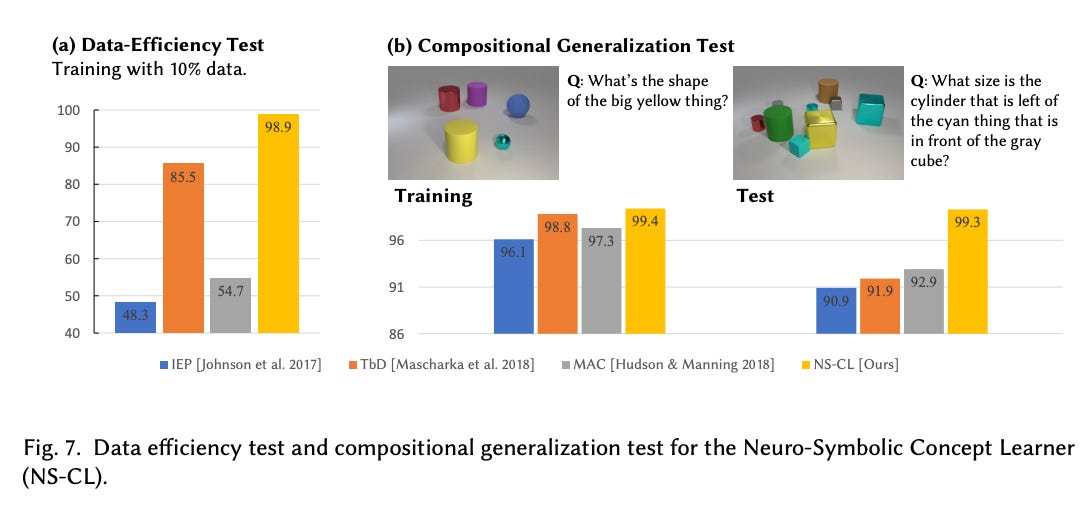

Data Efficiency: Learning effectively from limited datasets.

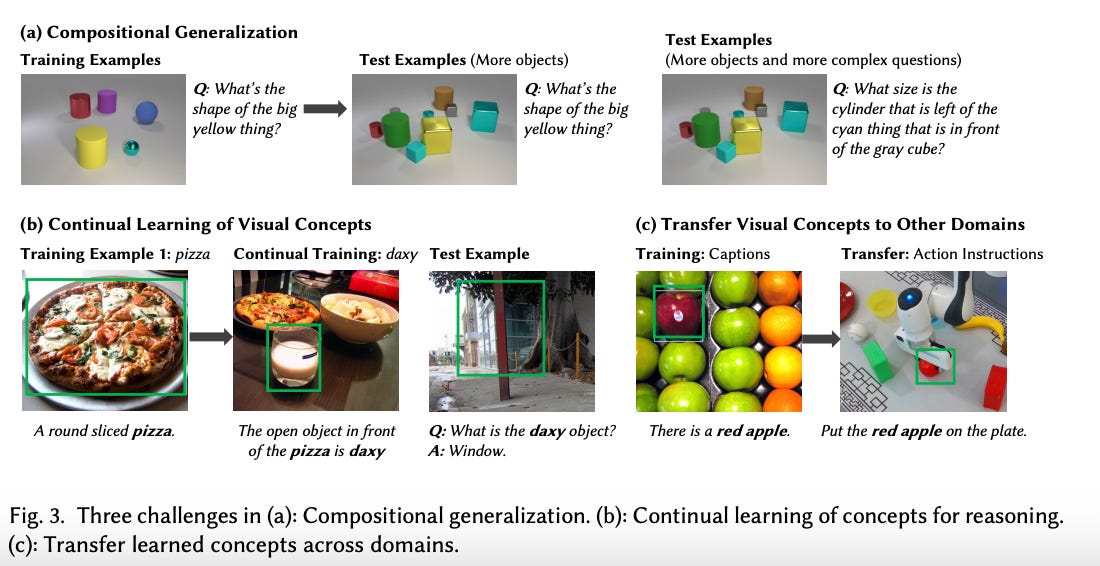

Compositional Generalization: Extending learned knowledge to novel combinations of known primitives at both concept and scene levels.

Continual Learning: Acquiring new concepts without catastrophic forgetting of previously learned knowledge.

Transfer Learning: Leveraging learned concepts across disparate tasks and domains.

The framework supports these properties by learning the grounding for visual and action concepts and dynamically recombining them according to task requirements, often guided by user queries. The authors highlight the modular decomposition of complex concepts into constituent components as a key factor in improving data efficiency and in enabling the disentangled representations needed for robust knowledge transfer.
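The query-guided recombination described above can be sketched as a small program executor in the style of CLEVR-like functional programs. Everything here is a hypothetical illustration rather than the paper's implementation: the scene features stand in for learned embeddings, the program for "How many red objects are left of the cube?" is hand-written rather than parsed, and scores are simply thresholded at 0.5. The last lines illustrate the modularity argument: a new concept is registered without touching the existing ones.

```python
from typing import Callable, Dict, List, Tuple

Obj = Dict[str, float]

# Registries of grounded concepts; the lambdas stand in for trained networks.
UNARY: Dict[str, Callable[[Obj], float]] = {
    "red": lambda o: o["redness"],
    "cube": lambda o: o["cubeness"],
}
BINARY: Dict[str, Callable[[Obj, Obj], float]] = {
    "left_of": lambda a, b: 1.0 if a["x"] < b["x"] else 0.0,
}


def execute(program: List[Tuple[str, ...]], scene: List[Obj]):
    """Run a functional program step by step over the scene."""
    state = scene
    for op, *args in program:
        if op == "filter":      # keep objects the unary concept accepts
            state = [o for o in state if UNARY[args[0]](o) > 0.5]
        elif op == "unique":    # expect exactly one object
            if len(state) != 1:
                raise ValueError(f"expected one object, got {len(state)}")
            state = state[0]
        elif op == "relate":    # objects standing in the relation to the current object
            anchor = state
            state = [o for o in scene
                     if o is not anchor and BINARY[args[0]](o, anchor) > 0.5]
        elif op == "count":
            state = len(state)
        else:
            raise ValueError(f"unknown operation: {op}")
    return state


scene = [
    {"redness": 0.9, "cubeness": 0.1, "sphericity": 0.9, "x": 0.0},  # red sphere
    {"redness": 0.1, "cubeness": 0.9, "sphericity": 0.1, "x": 1.0},  # blue cube
    {"redness": 0.8, "cubeness": 0.2, "sphericity": 0.2, "x": 2.0},  # red cylinder, right
    {"redness": 0.8, "cubeness": 0.2, "sphericity": 0.2, "x": 0.5},  # red cylinder, left
]

# "How many red objects are left of the cube?"
query = [("filter", "cube"), ("unique",), ("relate", "left_of"), ("filter", "red"), ("count",)]
print(execute(query, scene))  # 2

# Adding a concept later leaves the existing groundings untouched.
UNARY["sphere"] = lambda o: o["sphericity"]
print(execute([("filter", "sphere"), ("count",)], scene))  # 1
```

Because each concept lives in its own registry entry, a new query reuses old concepts in a new arrangement, and a new concept extends the vocabulary without rewriting the others.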

4. Generalizability and Domain Application of Neuro-Symbolic Concepts

Expanding on the previous section, Section 4 (also reportedly untitled) discusses the broader applicability of the general neuro-symbolic framework. It underscores how the framework's representations support efficient learning from diverse data streams, ranging from image-caption corpora to robotic demonstration data, and how learned representations can be recombined to address novel, unseen tasks. This section reinforces the framework's alignment with the overarching objectives of neuro-symbolic AI, which aims to harness the synergies between connectionist learning, symbolic reasoning, and probabilistic inference to develop more comprehensive AI systems.
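One common way to combine neural scores, symbolic structure, and probabilistic inference is to read each score as a probability and evaluate the logical form under an independence assumption. The sketch below computes the probability that "there exists a red cube" from per-object classifier outputs; the independence assumption, the function names, and the numbers are illustrative and are not taken from the paper.

```python
import math
from typing import Sequence


def p_and(p: float, q: float) -> float:
    """P(A and B), assuming A and B are independent."""
    return p * q


def p_exists(ps: Sequence[float]) -> float:
    """P(at least one event holds), assuming independent events (noisy-OR)."""
    return 1.0 - math.prod(1.0 - p for p in ps)


# Hypothetical per-object outputs of "red" and "cube" classifiers.
p_red = [0.9, 0.2, 0.7]
p_cube = [0.8, 0.9, 0.1]

# exists x. red(x) and cube(x)
p_answer = p_exists([p_and(r, c) for r, c in zip(p_red, p_cube)])
print(round(p_answer, 4))  # 0.7865
```

Because every operation is a smooth function of the input scores, an answer-level loss can in principle be back-propagated to the classifiers that produced them.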

5. Context, Synergies, and Future Directions

The concluding major section situates the neuro-symbolic concepts paradigm within the broader academic discourse on artificial intelligence. It elaborates on the synergistic potential of integrating neural networks, symbolic reasoning systems, and probabilistic inference models. The authors also engage critically with the current limitations of such approaches, including the challenges of relying on predefined domain-specific languages (DSLs). The discussion extends to prospective research avenues aimed at mitigating these limitations, charting a course for the continued evolution of adaptable and semantically rich AI.

Concluding Remarks

"Neuro-Symbolic Concepts" presents a compelling argument and a structured approach for integrating conceptual learning and reasoning into the fabric of AI agents. By providing a mechanism for grounding symbolic representations in perceptual data and enabling compositional generalization, the authors offer a significant contribution towards developing AI systems that are more data-efficient, adaptable, and capable of deeper understanding across a variety of complex tasks. The outlined directions for future work further underscore the dynamism of this research area.